Secure Your Applications Through DevSecOps and ‘Shift Left/Shift Right’ Security

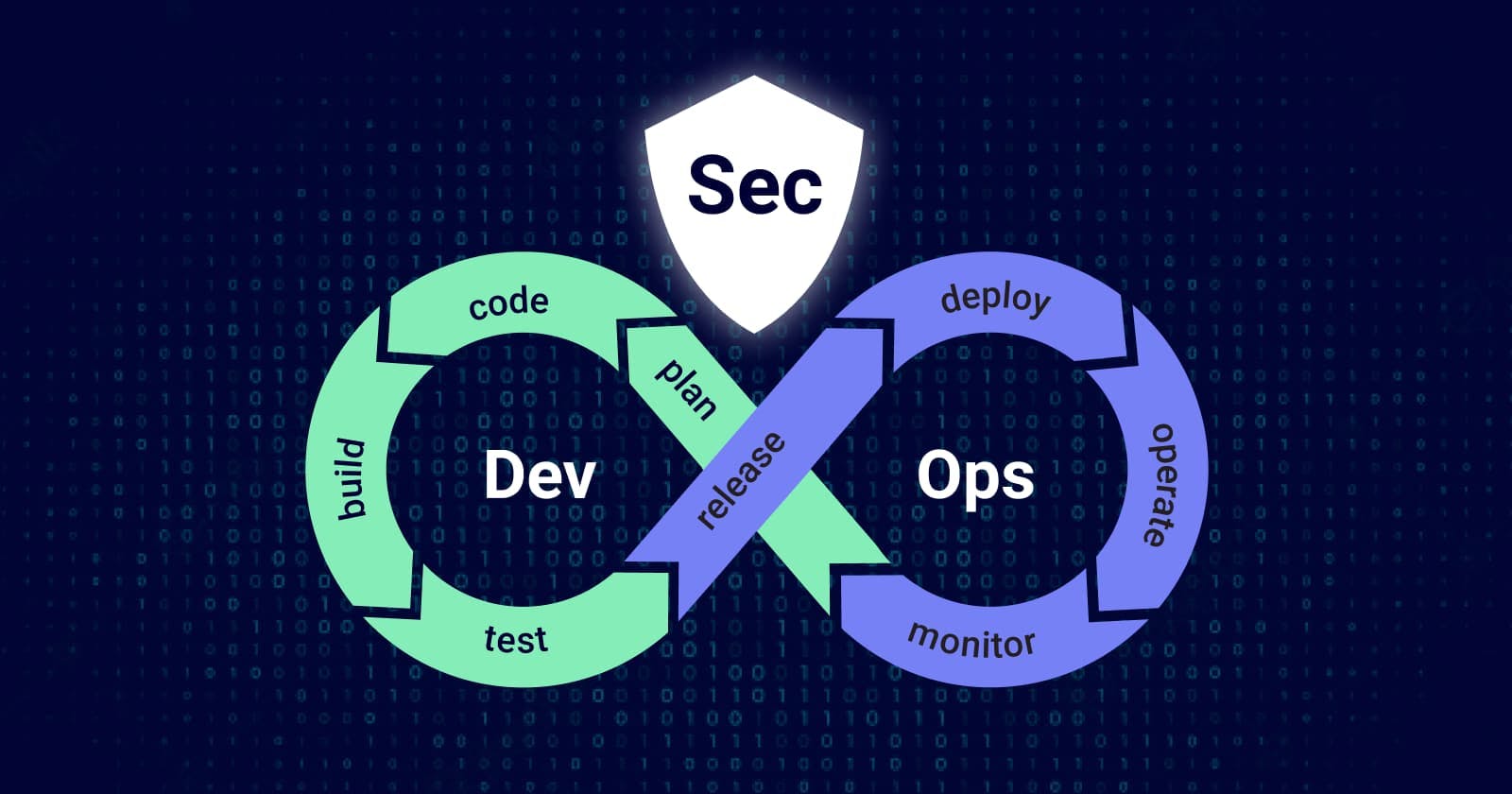

Building software is difficult enough without having to worry about managing the security considerations of an ongoing project. Development, Security, and Operations (DevSecOps) is much more than bolting security monitors and tools onto your current application.

DevSecOps shifts security testing to the left, that is, earlier in the development timeline. This “shift left” approach means fixes are applied earlier in the development process. “Shifting right” extends the idea in the other direction: development no longer just hands a new feature over to operations when it’s ready to deploy. Instead, the team “tests in production” by continuously assessing risk, analyzing, logging, monitoring, and fixing the live application.

What is DevSecOps?

DevSecOps, a specialty that branches from DevOps, takes an iterative approach to product security. Rather than layering security over a finished product, DevSecOps builds security considerations into every stage of a project’s lifecycle.

DevSecOps integrates security into each step of development to bake it into the product. Security becomes a concern from the beginning of the development process instead of an afterthought at the end. Because every part of the application is built with security in mind, there is no last-minute scramble to secure the application at the end of the development lifecycle.

The common processes involved in DevSecOps include:

Common Weakness Enumeration (CWE)

Threat Modeling

Automated Security Tests

Incident Management

CWE is a community-developed dictionary of software weakness types that can lead to vulnerabilities in a software project. MITRE maintains the list and updates it regularly, which makes it a valuable reference.
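Because every CWE entry has a stable numeric ID, teams often use those IDs as a shared vocabulary when triaging findings. The sketch below assumes findings from a hypothetical scanner arrive as objects with a `cweId` field; the four IDs are real CWE entries (with abbreviated names), while the `Finding` shape and `describeFinding` helper are illustrative.

```typescript
// A small subset of real CWE entries, with shortened names.
// The Finding type and describeFinding helper are hypothetical.
const CWE_NAMES: Record<number, string> = {
  79: "Cross-site Scripting",
  89: "SQL Injection",
  532: "Insertion of Sensitive Information into Log File",
  798: "Use of Hard-coded Credentials",
};

interface Finding {
  file: string;
  line: number;
  cweId: number;
}

// Turn a raw scanner finding into a readable line that names the weakness.
function describeFinding(f: Finding): string {
  const weakness = CWE_NAMES[f.cweId] ?? "Unknown weakness";
  return `${f.file}:${f.line} CWE-${f.cweId} ${weakness}`;
}

console.log(describeFinding({ file: "src/db.ts", line: 42, cweId: 89 }));
// src/db.ts:42 CWE-89 SQL Injection
```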

Threat modeling is the process of “planning” attacks on the various parts of a piece of software: working out in advance how an attacker might target each one. By planning for these threats ahead of time, you can prepare specific, granular controls for each of them, so you are ready to respond if an attack does occur.
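One way to keep a threat model actionable is to record it as data that a build step can check. This is a sketch under two assumptions: it uses the STRIDE categories, a widely used threat-modeling checklist that the article itself does not prescribe, and the component names and threats are hypothetical.

```typescript
// STRIDE: Spoofing, Tampering, Repudiation, Information disclosure,
// Denial of service, Elevation of privilege.
type Stride =
  | "Spoofing"
  | "Tampering"
  | "Repudiation"
  | "InformationDisclosure"
  | "DenialOfService"
  | "ElevationOfPrivilege";

interface Threat {
  component: string; // hypothetical component name
  category: Stride;
  description: string;
  mitigation?: string; // left undefined until a control is planned
}

// Return every threat that has no planned mitigation yet.
function unmitigated(threats: Threat[]): Threat[] {
  return threats.filter((t) => !t.mitigation);
}

const model: Threat[] = [
  { component: "login-api", category: "Spoofing", description: "Credential stuffing", mitigation: "Rate limiting and MFA" },
  { component: "upload-service", category: "Tampering", description: "Uploaded file overwrites a stored file" },
];

for (const t of unmitigated(model)) {
  console.log(`Unmitigated ${t.category} threat on ${t.component}: ${t.description}`);
}
```

A CI job could fail when `unmitigated(model)` is non-empty for a release branch, which turns the threat model into a gate rather than a document that drifts out of date.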

Automated security testing is the practice of running security audits and tests on code as it is written. Because these checks run automatically, typically on every commit or pull request, new code is tested continuously as it joins the project.
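As a minimal sketch of one such automated check, the function below scans source text for hard-coded credentials (CWE-798) so a pipeline can block the merge. The two patterns are illustrative assumptions; dedicated secret scanners ship far larger rule sets.

```typescript
// Illustrative patterns only; real secret scanners use many more rules.
const SECRET_PATTERNS: RegExp[] = [
  /AKIA[0-9A-Z]{16}/, // shape of an AWS access key ID
  /(password|secret|api_?key)\s*[:=]\s*["'][^"']+["']/i, // credential assigned a string literal
];

// Return the 1-based line numbers that look like they contain a secret.
function findSecrets(source: string): number[] {
  const hits: number[] = [];
  source.split("\n").forEach((line, i) => {
    if (SECRET_PATTERNS.some((p) => p.test(line))) hits.push(i + 1);
  });
  return hits;
}

const sample = [
  'const user = "alice";',
  'const password = "hunter2";',
  "const key = process.env.API_KEY;",
].join("\n");

const flagged = findSecrets(sample);
if (flagged.length > 0) {
  // In CI, exiting with a non-zero code at this point would fail the build.
  console.error(`Possible hard-coded secrets on lines: ${flagged.join(", ")}`);
}
```

Reading the key from `process.env` on the third line is not flagged, which is the behavior you want to encourage.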

Incident management is a framework for reporting and controlling security incidents associated with a piece of software. It gives admins a single source of truth when reacting to security issues.
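The “single source of truth” idea can be sketched as one shared register that every responder reads from and writes to. Everything here (`IncidentRegister`, the severity and status values) is a hypothetical, in-memory illustration; a real team would back this with a ticketing or incident-response tool.

```typescript
// Hypothetical types for a minimal incident register.
type Severity = "low" | "medium" | "high" | "critical";
type Status = "open" | "mitigated" | "resolved";

interface Incident {
  id: number;
  title: string;
  severity: Severity;
  status: Status;
  timeline: string[]; // ordered record of what happened and what was done
}

// One shared register, so every responder works from the same record.
class IncidentRegister {
  incidents = new Map<number, Incident>();
  nextId = 1;

  open(title: string, severity: Severity): Incident {
    const incident: Incident = { id: this.nextId++, title, severity, status: "open", timeline: [`opened: ${title}`] };
    this.incidents.set(incident.id, incident);
    return incident;
  }

  update(id: number, status: Status, note: string): void {
    const incident = this.incidents.get(id);
    if (!incident) throw new Error(`No incident with id ${id}`);
    incident.status = status;
    incident.timeline.push(`${status}: ${note}`);
  }

  // Everything not yet resolved, most severe first.
  active(): Incident[] {
    const rank: Record<Severity, number> = { critical: 0, high: 1, medium: 2, low: 3 };
    return Array.from(this.incidents.values())
      .filter((i) => i.status !== "resolved")
      .sort((a, b) => rank[a.severity] - rank[b.severity]);
  }
}

const incidentLog = new IncidentRegister();
const loginSpike = incidentLog.open("Spike in failed logins", "high");
incidentLog.open("Outdated TLS cipher on staging", "low");
incidentLog.update(loginSpike.id, "mitigated", "rate limit lowered on /login");
console.log(incidentLog.active().map((i) => `${i.severity}: ${i.title} (${i.status})`));
```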

A typical DevSecOps implementation consists of several steps:

Developers commit their code and create a pull request

Another developer performs a code peer-review and assesses security risks associated with the latest commit

If the code passes review, it is merged and an instance is created, pre-loaded with a suite of test tools

The build runs all unit tests, including security tests

If the tests pass, the code is deployed to staging, and then to production

Automated security monitoring watches the production environment, ready to notify the team of any potential security risks

If an exploit is found, the system will roll back to the previous build. The original developer is notified of the issue, and the entire process repeats from the first step
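The control flow of these steps can be sketched as a chain of gates that stops at the first failure, plus a rollback path for production. The stage names mirror the list; the `run` functions are hypothetical stand-ins for real CI/CD jobs, and build identifiers are plain strings for illustration.

```typescript
// Hypothetical stand-ins for real CI/CD jobs; each returns true on success.
interface Stage {
  name: string;
  run: () => boolean;
}

interface PipelineResult {
  passed: boolean;
  failedStage?: string;
}

// Run the gates in order and stop at the first failure, so code only
// reaches staging or production if every earlier check passed.
function runPipeline(stages: Stage[]): PipelineResult {
  for (const stage of stages) {
    if (!stage.run()) {
      return { passed: false, failedStage: stage.name };
    }
  }
  return { passed: true };
}

// Production side: when monitoring flags an exploit, drop the current build,
// fall back to the previous one, and notify the original developer.
function rollBack(deployedBuilds: string[], notify: (message: string) => void): string | undefined {
  const bad = deployedBuilds.pop(); // mutates the list: the bad build is removed
  const previous = deployedBuilds[deployedBuilds.length - 1];
  notify(`Exploit detected in ${bad}; rolled back to ${previous ?? "nothing"}`);
  return previous;
}

const pipelineResult = runPipeline([
  { name: "peer review", run: () => true },
  { name: "unit and security tests", run: () => false }, // simulate a failing security test
  { name: "deploy to staging", run: () => true },
  { name: "deploy to production", run: () => true },
]);
console.log(pipelineResult);

rollBack(["build-41", "build-42"], (msg) => console.log(msg));
```

Here the simulated security-test failure stops the run before either deploy stage executes, which is the property the step list relies on.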

Why is DevSecOps important?

DevSecOps allows for faster issue remediation and increases the coverage of standard security processes. In other words, you get more bang for your buck from standard security processes, because they run again and again on the same code.

It’s far easier to catch security problems before the later stages of the software development lifecycle, which means you save money and fix bugs faster. With the many tools now available, it is not difficult to implement.

How does OWASP ASVS help?

One of the easiest ways to implement DevSecOps is through the OWASP Application Security Verification Standard (ASVS). The Open Web Application Security Project® (OWASP) is an organization that standardizes and promotes application security, and its practices have been adopted by enterprises that care about cybersecurity and protecting sensitive data. ASVS is Quantum Mob’s de facto choice, and we use it for all of our Node.js implementations. It is well established and helps ensure security throughout the build process.

The Application Security Verification Standard defines three security verification levels, with each level increasing in depth. These levels are defined by OWASP as:

Level 1 is for low assurance levels and is completely penetration testable

Level 2 is for applications that contain sensitive data, which requires protection and is the recommended level for most apps

Level 3 is for the most critical applications - applications that perform high-value transactions, contain sensitive medical data, or any application that requires the highest level of trust.

While there are three levels of ASVS, most implementations will want to focus on Level 2.

Five important ASVS requirements

ASVS contains hundreds of requirements, and trying to work through all of them at once is counterproductive. Instead, start with these five important requirement areas, which every project owner should keep in mind while building.

- Architecture, Design and Threat Modeling Requirements

Everyone should use a source code control system, with procedures to ensure that check-ins are accompanied by issues or change tickets. This makes every change traceable to the reason it was made, so the code can be reviewed and iterated on safely as the app is built.
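The “every check-in has a ticket” rule is easy to automate. A minimal sketch, assuming your tickets look like Jira-style keys (`SEC-142`) or GitHub-style references (`#57`); both formats are assumptions, so adjust the pattern to your tracker.

```typescript
// Hypothetical ticket formats: Jira-style keys such as SEC-142, or GitHub-style #57.
const TICKET_PATTERN = /\b[A-Z][A-Z0-9]+-\d+\b|#\d+\b/;

// True when a commit message references an issue or change ticket.
function hasTicketReference(message: string): boolean {
  return TICKET_PATTERN.test(message);
}

console.log(hasTicketReference("SEC-142: limit upload size")); // true
console.log(hasTicketReference("quick fix")); // false
```

Git runs a `commit-msg` hook with the path of the message file as its first argument, so a hook script could read that file, call a check like this, and exit non-zero to reject the commit. The same check can run in CI against pull request titles.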

Files originating from end users should be served either as octet-stream downloads or from an unrelated domain, such as a cloud file storage bucket. This prevents a malicious upload, such as an HTML file containing script, from being rendered as part of your application’s own site.
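For the octet-stream option, the important part is the response headers. A sketch for Node.js, assuming uploads are served from the main domain; the `userFileHeaders` helper and its filename rule are illustrative choices, not an ASVS-mandated format.

```typescript
import { basename } from "node:path";

// Response headers for serving an end-user upload from the main domain.
// "attachment" makes the browser download the file instead of rendering it,
// and "nosniff" stops it from guessing a renderable type such as text/html.
function userFileHeaders(filename: string): Record<string, string> {
  // Keep only the final path segment and replace anything outside a safe set,
  // so the name cannot break out of the quoted header value.
  const safeName = basename(filename).replace(/[^\w.-]/g, "_");
  return {
    "Content-Type": "application/octet-stream",
    "Content-Disposition": `attachment; filename="${safeName}"`,
    "X-Content-Type-Options": "nosniff",
  };
}

console.log(userFileHeaders("../../invoice <script>.html"));
```

In a `node:http` handler these would be passed as `res.writeHead(200, userFileHeaders(name))` before streaming the file body. Serving from a separate storage domain avoids the question entirely, because a rendered file would not share your application's origin.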

- Authentication Verification Requirements

Verify that shared or default accounts (such as root or admin) are not present in any production software. This reduces the chance that attackers can take full control of the system through a well-known administrator account.
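This check can run automatically at deploy time. A minimal sketch, assuming you can obtain the list of provisioned usernames from your database or identity provider; the blocked names below are an illustrative, not exhaustive, list.

```typescript
// Names commonly used for shared or default accounts (illustrative, not exhaustive).
const FORBIDDEN_ACCOUNTS = new Set(["root", "admin", "administrator", "sa", "guest"]);

// Run at deploy or startup time against the list of provisioned accounts;
// throwing here fails the release instead of shipping a default login.
function assertNoDefaultAccounts(usernames: string[]): void {
  const found = usernames.filter((u) => FORBIDDEN_ACCOUNTS.has(u.trim().toLowerCase()));
  if (found.length > 0) {
    throw new Error(`Shared or default accounts present: ${found.join(", ")}`);
  }
}

assertNoDefaultAccounts(["alice", "deploy-bot"]); // passes silently
```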

Out-of-band verifiers should expire out-of-band authentication requests, codes, or tokens after 10 minutes. This narrows the window in which an intercepted code can be used.
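A sketch of the 10-minute rule for a one-time code sent by SMS or email. The 6-digit format and the `issueCode`/`verifyCode` helpers are assumptions for illustration; `randomInt` from `node:crypto` provides a cryptographically secure random number.

```typescript
import { randomInt } from "node:crypto";

// ASVS: out-of-band codes must expire after 10 minutes.
const OOB_TTL_MS = 10 * 60 * 1000;

interface OobCode {
  code: string;
  issuedAt: number; // epoch milliseconds
}

// Issue a random 6-digit code to send over a separate channel (e.g. SMS or email).
function issueCode(now: number = Date.now()): OobCode {
  const code = randomInt(0, 1_000_000).toString().padStart(6, "0");
  return { code, issuedAt: now };
}

// Accept a submission only if it matches and the code is under 10 minutes old.
// A production version would also make codes single-use and limit attempts.
function verifyCode(issued: OobCode, submitted: string, now: number = Date.now()): boolean {
  const fresh = now - issued.issuedAt < OOB_TTL_MS;
  return fresh && issued.code === submitted;
}

const sent = issueCode();
console.log(verifyCode(sent, sent.code)); // true: checked immediately
```

Passing `now` explicitly keeps the expiry logic testable without waiting ten real minutes.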

- Session Management Verification Requirements

Never reveal session tokens in URL parameters. A session token is as sensitive as a password: anyone who obtains it, for example from a log file or browser history, can impersonate that user.
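In Node.js, the usual alternative to a URL parameter is a cookie. A sketch, assuming a cookie named `session` and a hypothetical guard that flags query parameters which look like session tokens; the parameter names in the guard are guesses you would tailor to your app.

```typescript
import { randomBytes } from "node:crypto";

// Session tokens go in a cookie, never in the URL, where they would end up
// in server logs, browser history, and Referer headers.
function sessionCookie(token: string): string {
  // HttpOnly: hidden from page scripts; Secure: HTTPS only; SameSite: not sent cross-site.
  return `session=${token}; HttpOnly; Secure; SameSite=Strict; Path=/`;
}

// Hypothetical guard: query parameter names that suggest a leaked session token.
const TOKEN_PARAM_NAMES = ["session", "sessionid", "sid", "token"];

function hasTokenInUrl(requestUrl: string): boolean {
  const params = new URL(requestUrl, "http://localhost").searchParams;
  return Array.from(params.keys()).some((k) => TOKEN_PARAM_NAMES.includes(k.toLowerCase()));
}

const sessionToken = randomBytes(32).toString("base64url"); // 256 random bits
console.log(sessionCookie(sessionToken));
console.log(hasTokenInUrl("/account?sessionId=abc123")); // true
```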

Require re-authentication after 30 minutes of inactivity or 12 hours of activity. This limits how long an unattended or stolen session remains usable.
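The two limits translate into an idle timeout and an absolute timeout. A sketch, assuming your session store records when the user signed in and when they last made a request; the `UserSession` shape and helper names are hypothetical.

```typescript
const IDLE_TIMEOUT_MS = 30 * 60 * 1000;          // 30 minutes without a request
const ABSOLUTE_TIMEOUT_MS = 12 * 60 * 60 * 1000; // 12 hours since sign-in

interface UserSession {
  authenticatedAt: number; // epoch ms when the user last signed in
  lastActivityAt: number;  // epoch ms of the most recent request
}

// True when the user must sign in again before the request is served.
function needsReauth(s: UserSession, now: number = Date.now()): boolean {
  const idleTooLong = now - s.lastActivityAt >= IDLE_TIMEOUT_MS;
  const activeTooLong = now - s.authenticatedAt >= ABSOLUTE_TIMEOUT_MS;
  return idleTooLong || activeTooLong;
}

// Call on each request that passes the check, to keep the idle timer accurate.
function touch(s: UserSession, now: number = Date.now()): void {
  s.lastActivityAt = now;
}
```

The absolute timeout is what stops a constantly used session from living forever: `touch` resets only the idle timer, never `authenticatedAt`.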

- Validation, Sanitization, and Encoding Verification Requirements

The basics include validating and sanitizing unstructured data to enforce rules such as allowed characters and maximum length, and protecting against database injection attacks.
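Both basics fit in a few lines: an allow-list rule for the input, and a parameterized query so the input never becomes part of the SQL text. The username rule is an illustrative assumption; the `{ text, values }` object is the query shape that drivers such as node-postgres accept via `client.query(...)`.

```typescript
// Allow-list validation: letters, digits, underscore, 3 to 32 characters (illustrative rule).
const USERNAME_RULE = /^[A-Za-z0-9_]{3,32}$/;

function validateUsername(input: string): string {
  if (!USERNAME_RULE.test(input)) {
    throw new Error("Invalid username");
  }
  return input;
}

// Keep user input out of the SQL text: the value travels separately as a parameter,
// so even input that slipped past validation cannot change the query's structure.
function findUserQuery(username: string): { text: string; values: string[] } {
  return {
    text: "SELECT id, email FROM users WHERE username = $1",
    values: [validateUsername(username)],
  };
}

console.log(findUserQuery("alice_1"));
```

Validation and parameterization are complementary: the first rejects malformed data early, the second keeps injection impossible even if a validation rule is too loose.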

This category also requires that URL redirects and forwards only go to destinations that appear on an allow list, or that the application shows a warning when redirecting to potentially untrusted content.
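A sketch of the allow-list option, assuming the redirect target arrives as an untrusted string (for example a `?next=` parameter). The host names are hypothetical placeholders.

```typescript
// Hosts the application may redirect to (a hypothetical allow list).
const REDIRECT_ALLOW_LIST = new Set(["example.com", "accounts.example.com"]);

function parseAbsoluteUrl(value: string): URL | null {
  try {
    return new URL(value);
  } catch {
    return null; // relative or malformed input
  }
}

// Follow the requested target only if it is an https URL on an allowed host;
// anything else, including relative paths, goes to a fallback on our own site.
function safeRedirect(target: string, fallback = "/"): string {
  const url = parseAbsoluteUrl(target);
  if (url && url.protocol === "https:" && REDIRECT_ALLOW_LIST.has(url.hostname)) {
    return url.toString();
  }
  return fallback;
}

console.log(safeRedirect("https://accounts.example.com/login"));
console.log(safeRedirect("https://example.com.attacker.test/")); // falls back to "/"
```

Comparing the parsed `hostname` exactly, rather than checking whether the string contains an allowed domain, is what defeats look-alike hosts such as `example.com.attacker.test`. A variant could render a warning page instead of silently falling back, which is the other option the requirement allows.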

- Error Handling and Logging Verification Requirements

Almost every developer understands they should never log credentials or payment details, but it is easy to do by accident. Make sure credentials and payment details are kept out of your logs entirely.
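A common safeguard is to redact sensitive fields before anything reaches the logger. A minimal sketch, assuming structured (JSON) logging; the set of sensitive key names is an illustrative assumption you would extend for your own data.

```typescript
// Field names treated as sensitive (illustrative; extend for your domain).
const SENSITIVE_KEYS = new Set(["password", "token", "cardnumber", "cvv", "authorization"]);

// Return a copy of a log payload with sensitive fields masked, at any depth.
function redact(value: unknown): unknown {
  if (Array.isArray(value)) return value.map(redact);
  if (value !== null && typeof value === "object") {
    const out: Record<string, unknown> = {};
    for (const [key, v] of Object.entries(value)) {
      out[key] = SENSITIVE_KEYS.has(key.toLowerCase()) ? "[REDACTED]" : redact(v);
    }
    return out;
  }
  return value;
}

// Every structured log line goes through redact() first.
function logEvent(eventName: string, data: Record<string, unknown>): void {
  console.log(JSON.stringify({ event: eventName, ...(redact(data) as Record<string, unknown>) }));
}

logEvent("checkout", { user: "alice", card: { cardNumber: "4111111111111111", expiry: "12/30" } });
```

Key-based redaction only catches fields it knows about, so it works best alongside code review and secret-scanning of log output.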

Always implement a “last resort” error handler that catches all unhandled exceptions, especially exceptions that could crash the system or leave it in a state that allows unauthorized access.
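In Node.js, a last-resort handler usually has two layers: process-level listeners for anything that escapes completely, and a per-request wrapper that returns a generic error instead of a stack trace. A sketch, assuming a plain `node:http` server; `withLastResort` is a hypothetical helper name.

```typescript
import type { IncomingMessage, ServerResponse } from "node:http";

// Process-level last resort. Node's documentation warns that it is not safe to
// resume normal operation after an uncaught exception, so log and exit, and let
// a supervisor (for example a container orchestrator) restart the service.
process.on("uncaughtException", (err: Error) => {
  console.error(JSON.stringify({ level: "fatal", message: err.message }));
  process.exit(1);
});

process.on("unhandledRejection", (reason: unknown) => {
  console.error(JSON.stringify({ level: "fatal", message: String(reason) }));
  process.exit(1);
});

type Handler = (req: IncomingMessage, res: ServerResponse) => void;

// Request-level last resort for synchronous errors: the client gets a generic 500
// with no stack trace, while the details stay in server-side logs.
function withLastResort(handler: Handler): Handler {
  return (req, res) => {
    try {
      handler(req, res);
    } catch (err) {
      console.error(err);
      if (!res.headersSent) {
        res.writeHead(500, { "Content-Type": "text/plain" });
      }
      res.end("Internal Server Error");
    }
  };
}
```

Usage would look like `http.createServer(withLastResort(handler))`. Errors thrown inside async code are not caught by the `try`; they surface as rejections, which is why the `unhandledRejection` listener is part of the same safety net.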

The best part of these requirements is that they are the security equivalent of rules of thumb. They’re easy to understand and let developers build security into their products before production code has any chance to be exploited. While no two projects are the same, secure products generally follow simple rules like these to keep their users safe.

Focus on what matters

As an application grows more complex, the effort needed to secure it cannot simply keep growing alongside it. Add Shift Left and Shift Right practices to your current development cycle, and help your team see the value in this approach. When security is handled at every step of development, teams can focus on what really matters: the product.